Incorporating Rich Social Interactions Into MDPs

For robots to engage socially with other agents as naturally as humans do, we need a rich theory of social interactions. We formalize this by extending Social MDPs so that agents reason, at different levels of recursion, about arbitrary functions of each other's hidden rewards. The extended Social MDPs encode five basic social interactions (cooperation, conflict, competition, coercion, and exchange) and produce actions that are close to human judgements.

Scenarios

We apply the extended Social MDP framework to a multi-agent gridworld consisting of two agents (a yellow robot and a red robot), two physical landmarks (a construction site and a tree), and three objects (an axe, a wooden log, and a water bucket). A physical goal consists of moving a desired object to one of the landmarks. Each agent can have no social goal or one of the five social goals (cooperation, conflict, competition, coercion, or exchange), giving 2 × 6 × 6 = 72 scenarios. See all scenarios for the full list of experimental scenarios.
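The scenario count above can be checked with a short enumeration. This is a minimal sketch, not the project's code: the six social-goal labels come from the text, but the text does not say what the leading factor of 2 stands for, so `PHYSICAL_SETUPS` and its two labels are placeholder assumptions.

```python
from itertools import product

# Social goals named in the text: "none" plus the five interaction types.
SOCIAL_GOALS = ["none", "cooperation", "conflict", "competition", "coercion", "exchange"]

# Assumption: the leading factor of 2 is some binary choice of physical setup.
# The text does not specify it, so these labels are placeholders.
PHYSICAL_SETUPS = ["setup_a", "setup_b"]


def enumerate_scenarios():
    """Return every (physical setup, yellow social goal, red social goal) triple."""
    return list(product(PHYSICAL_SETUPS, SOCIAL_GOALS, SOCIAL_GOALS))


scenarios = enumerate_scenarios()
print(len(scenarios))  # 2 * 6 * 6 combinations
```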

[Panels: Level 1 | Level 2]

Example Demonstration

Red robot's goals: Social: Conflict; Physical: Tree

Yellow robot's goals: Social: None; Physical: Construction Site

Using the extended Social MDP at different levels of reasoning, the yellow robot estimates the physical and social goals of the red robot and takes optimal actions to reach its own goal.
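To give a feel for the goal-estimation step, here is a minimal sketch of Bayesian goal inference from observed actions. It is not the extended Social MDP algorithm: it infers only a physical goal, uses a single level of reasoning, and assumes a Boltzmann-rational observed agent. The one-dimensional corridor, the cell positions, and the `beta` parameter are all hypothetical choices made for illustration.

```python
import math

# Hypothetical 1-D corridor with cells 0..6: tree at cell 0, construction site at cell 6.
GOALS = {"tree": 0, "construction_site": 6}
ACTIONS = {"left": -1, "stay": 0, "right": 1}
LAST_CELL = 6


def step(position, action):
    """Move along the corridor, clamping at the walls."""
    return min(LAST_CELL, max(0, position + ACTIONS[action]))


def action_likelihood(position, action, goal_cell, beta=2.0):
    """Boltzmann-rational policy: actions that end closer to the goal are more likely."""
    weights = {
        a: math.exp(-beta * abs(step(position, a) - goal_cell)) for a in ACTIONS
    }
    return weights[action] / sum(weights.values())


def infer_goal(start, observed_actions):
    """Update a uniform prior over goals with each observed action (Bayes' rule)."""
    belief = {goal: 1.0 / len(GOALS) for goal in GOALS}
    position = start
    for action in observed_actions:
        for goal, cell in GOALS.items():
            belief[goal] *= action_likelihood(position, action, cell)
        total = sum(belief.values())
        belief = {goal: p / total for goal, p in belief.items()}
        position = step(position, action)
    return belief


# The observed agent starts in the middle and moves left twice, toward the tree.
belief = infer_goal(start=3, observed_actions=["left", "left"])
print(belief)
```

In this toy setting the belief shifts toward the tree after each leftward move. Reasoning about social goals would additionally require a likelihood that depends on the other agent's estimated reward, which this sketch leaves out.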

Results

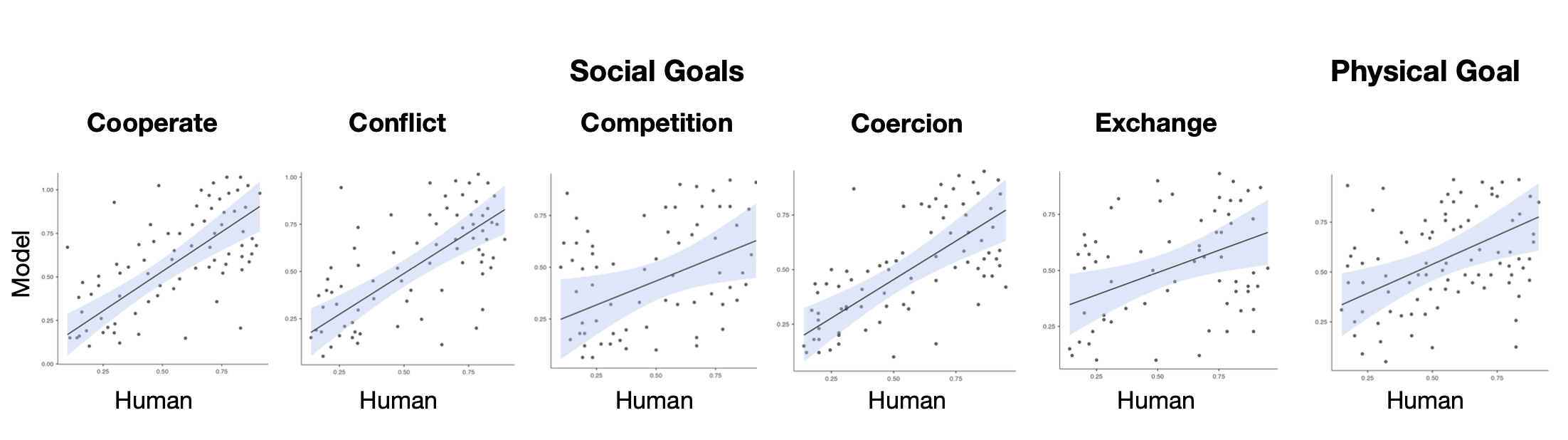

Humans and our model scored each of the 72 scenarios according to how likely each social interaction was and how likely each physical goal was. The straight line is the best linear fit, and the light blue band represents the 95% confidence interval. Our model agrees with humans and predicts their confidence scores for both the social interactions and the physical goals. See results for all scenarios.

Paper

The paper is currently under review at the 39th IEEE International Conference on Robotics and Automation (ICRA 2022). Refer to the latest version of the paper.

Code

Refer to the Social-Interactions-MDP repository for the codebase.